Math Amateur

I am reading Hugo D. Junghenn's book: "A Course in Real Analysis" ...

I am currently focused on Chapter 9: "Differentiation on ##\mathbb{R}^n##"

I need some help with an aspect of Theorem 9.1.10 ...

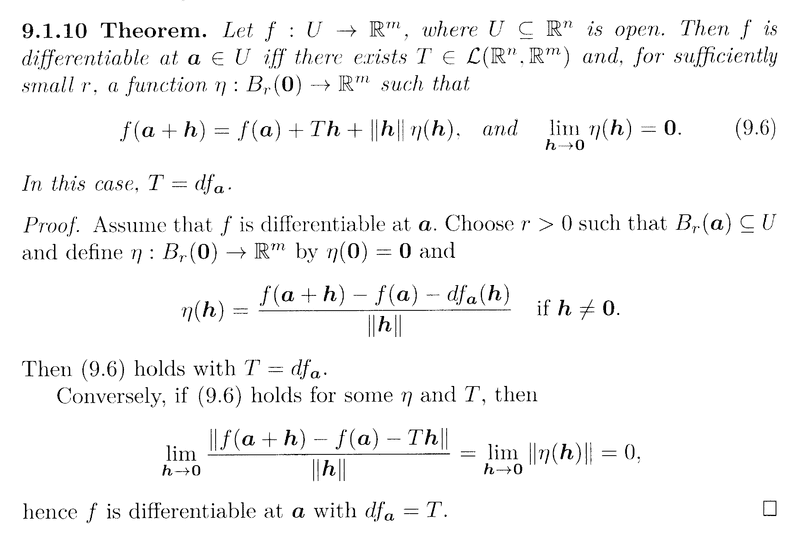

Theorem 9.1.10 reads as follows:

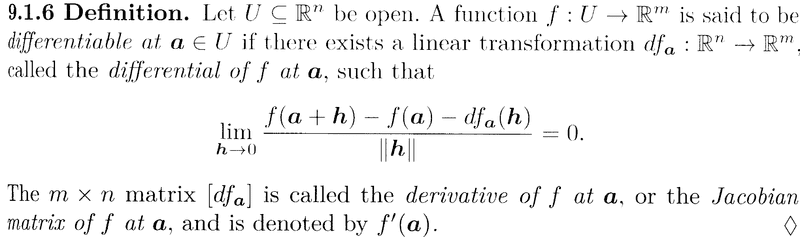

The proof of Theorem 9.1.10 relies on the definition of the derivative of a vector-valued function of several variables ... that is, Definition 9.1.6 ... so I am providing the same ... as follows:

In Junghenn's proof of Theorem 9.1.10 above, we read the following:

" ... ... and

##\eta (h) = \frac{ f(a + h ) - f(a) - df_a (h) }{ \| h \| }## if ##h \neq 0##

... ... "

Now there are no norm signs around this expression (with the exception of around ##h## in the denominator ...) ... and indeed no norm signs around the expression ##\lim_{ h \rightarrow 0 } \eta(h) = 0## ... nor indeed are there any norm signs in the limit shown in Definition 9.1.6 above (with the exception of around ##h## in the denominator ...) ...

... BUT ...

... ... this lack of norm signs seems in contrast to the last few lines of the proof of Theorem 9.1.10 as follows ... where we read ...

" ... ... Conversely if (9.6) holds for some ##\eta## and ##T##, then

##\lim_{ h \rightarrow 0 } \frac{ \| f( a + h ) - f(a) - Th \| }{ \| h \| } = \lim_{ h \rightarrow 0 } \| \eta(h) \| = 0##

... ... "

Here, in contrast to the case above, there are norm signs around the numerator and indeed around ##\eta(h)## ... ...
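To make the comparison concrete, the two forms can be set side by side. This is only a sketch, assuming the usual ##\varepsilon##-##\delta## definition of a limit for vector-valued functions (where distance in the target space is measured by the norm) ... under that assumption the two statements would say the same thing:

$$\lim_{ h \rightarrow 0 } \eta(h) = 0 \iff \Big( \forall \varepsilon > 0 \ \exists \delta > 0 : \ 0 < \| h \| < \delta \implies \| \eta(h) - 0 \| < \varepsilon \Big) \iff \lim_{ h \rightarrow 0 } \| \eta(h) \| = 0$$

where, for ##h \neq 0##,

$$\| \eta(h) \| = \frac{ \| f(a + h ) - f(a) - df_a (h) \| }{ \| h \| }$$

Whether this is the reading Junghenn intends is not confirmed by the text quoted here ...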

Can someone please explain why norm signs are used in the numerator and, indeed, around ##\eta(h)## in one case ... yet not the other ...

Help will be appreciated ...

Peter
