phinds submitted a new PF Insights post

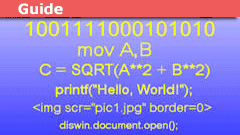

Computer Language Primer - Part 1

Continue reading the Original PF Insights Post.

anorlunda said: @phinds, thanks for the trip down memory lane.

Oh, I didn't even get started on the early days. No mention of punched card decks, teletypes, paper tape machines, and huge clean rooms with white-coated machine operators, to say nothing of ROM burners for writing your own BIOS in the early PC days, and on and on. I could have done a LONG trip down memory lane without really telling anyone much of any practical use for today's world, but I resisted the urge.

Greg Bernhardt said: Great part 1 phinds!

AAACCCKKKKK! That reminds me that now I have to write part 2. Damn!

phinds said: AAACCCKKKKK!

I thought it was spelled ACK...

Mark44 said: I thought it was spelled ACK...

No, that's a minor ACK. Mine was a heartfelt, major ACK, generally written as AAACCCKKKKK!

(As opposed to NAK)

phinds said: AAACCCKKKKK! That reminds me that now I have to write part 2.

No choice.

256bits said: No choice.

Yeah, but part 2 is likely to be the alternate interpretation of the acronym for ASCII 08.

You have sent an ASCII 06.

Full Duplex mode?

ASCII 07 will get the attention for part 2.

rcgldr said: "8080 instruction guide (the CPU on which the first IBM PCs were based)." The first IBM PCs were based on the 8088, same instruction set as the 8086, but with only an 8-bit data bus. The Intel 8080, 8085, and Zilog Z80 were popular in the pre-PC systems, such as S-100 bus systems, ...

Nuts. You are right, of course. I spent so much time programming the 8080 on CP/M systems that I forgot that IBM went with the 8088. I'll make a change. Thanks.

rcgldr said: No mention of plugboard programming.

The number of things that I COULD have brought in, that have little or no relevance to modern computing, would have swamped the whole article.

phinds said: Yes, there are a TON of such fairly obscure points that I could have brought in, but the article was too long as is and that level of detail was just out of scope.

stevendaryl said: Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.

David Reeves said: One thing I noticed is that although you mention LISP, you did not mention the topic of languages for artificial intelligence. I did not see any mention of Prolog, which was the main language for the famous 5th Generation Project in Japan.

This was NOT intended as a thoroughly exhaustive discourse. If you look at the Wikipedia list of languages, you'll see that I left out more than I put in, but that was deliberate.

It could also be useful to discuss functional programming languages or functional programming techniques in general.

And yes, I could have written thousands of pages on all aspects of computing. I chose not to.

Since you mention object-oriented programming and C++, how about also mentioning Simula, the language that started it all, and Smalltalk, which took OOP to what some consider an absurd level.

See above.

Finally, I do not see any mention of Pascal, Modula, and Oberon. The work on this family of languages by Prof. Wirth is one of the greatest accomplishments in the history of computer languages.

Pascal is listed but not discussed. See above.

stevendaryl said: Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.

Basically, I think most people see "scripting" in two ways. The first is, for example, BASIC, which is an interpreted computer language, and the second is, for example, Perl, which is a command language. The two are quite different, but I'm not going to get into that. It's easy to find on the internet.

jedishrfu said: One word of clarification on the history of markup is that while HTML is considered to be the first markup language, it was in fact adapted from the SGML (1981-1986) standard of Charles Goldfarb by Sir Tim Berners-Lee:

NUTS, again. Yes, you are correct. I actually found that all out AFTER I had done the "final" edit and just could not stand the thought of looking at the article for the 800th time, so I left it in. I'll make a correction. Thanks.

stevendaryl said: Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.

David Reeves said: Speaking of scripting languages, consider Lua, which is the most widely used scripting language for game development. Within a development team, some programmers may only need to work at the Lua script level, without ever needing to modify and recompile the core engine. For example, how a certain game character behaves might be controlled by a Lua script. This sort of scripting could also be made accessible to the end users. But Lua is not an interpreted language.

stevendaryl said: Something that I was never clear on was what made a "scripting language" different from an "interpreted language"? I don't see that much difference in principle between Javascript and Python, on the scripting side, and Java, on the interpreted side, other than the fact that the scripting languages tend to be a lot more loosey-goosey about typing.

vela said: Another typo: I believe you meant "fourth generation," not "forth generation."

Thanks.

jedishrfu said: Scripting languages usually can interact with the command shell that you're running them in. They are interpreted and can evaluate expressions that are provided at runtime.
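As a small illustration of "evaluate expressions that are provided at runtime", here is a Python sketch, with Python standing in for a typical scripting language. The helper name `evaluate_at_runtime` is hypothetical; the point is only that the expression is plain text until the moment the running program decides to evaluate it.

```python
# Minimal sketch (hypothetical helper): a scripting language can take source
# text that only exists at runtime (e.g. typed by a user) and evaluate it.

def evaluate_at_runtime(expression: str, variables: dict) -> object:
    """Evaluate a Python expression string against the given variables.

    Builtins are hidden so the expression only sees `variables`.
    This is NOT a security sandbox: never eval untrusted input.
    """
    return eval(expression, {"__builtins__": {}}, dict(variables))


if __name__ == "__main__":
    # The expression is just a string until this call evaluates it.
    print(evaluate_at_runtime("speed * 2 + 1", {"speed": 20}))  # 41
```

A compiled language would normally need the expression to be known before the program is built; here it can come from a config file, a prompt, or a game-level script.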

jedishrfu said: Java is actually compiled into bytecodes that act as machine code for the JVM. This allows Java to span many computing platforms in a write once run anywhere kind of way.
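To make "bytecodes that act as machine code for the JVM" a bit more concrete, here is a toy sketch in Python. It is not real JVM bytecode: the opcodes (`PUSH`, `ADD`, `MUL`, `PRINT`) and function names are hypothetical, chosen only to mimic the stack-based style the JVM uses. Compilation happens once (here, by hand), and the resulting instruction list is then executed by an interpreter loop, which is the only part that would need porting to each platform.

```python
from typing import List, Tuple

# Hypothetical opcodes for a toy stack machine (NOT real JVM instructions).
PUSH, ADD, MUL, PRINT = "PUSH", "ADD", "MUL", "PRINT"

# One instruction = an opcode followed by zero or more operands.
Instruction = Tuple[object, ...]


def hand_compiled_program() -> List[Instruction]:
    """Stand-in for a compiler's output: bytecode for print((2 + 3) * 4)."""
    return [(PUSH, 2), (PUSH, 3), (ADD,), (PUSH, 4), (MUL,), (PRINT,)]


def run(bytecode: List[Instruction]) -> List[int]:
    """Execute bytecode on an operand stack and return the printed values."""
    stack: List[int] = []
    printed: List[int] = []
    for op, *operands in bytecode:
        if op == PUSH:
            stack.append(operands[0])
        elif op in (ADD, MUL):
            right = stack.pop()  # operands come off the stack in reverse order
            left = stack.pop()
            stack.append(left + right if op == ADD else left * right)
        elif op == PRINT:
            value = stack.pop()
            print(value)
            printed.append(value)
        else:
            raise ValueError(f"unknown opcode: {op!r}")
    return printed


if __name__ == "__main__":
    run(hand_compiled_program())  # prints 20
```

Because the compiled program is just data, the same list runs unchanged wherever this loop runs, which is the "write once, run anywhere" idea in miniature; a production JVM is, of course, far more elaborate.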

vela said: Another typo: I believe you meant "fourth generation," not "forth generation."

nsaspook said: Also missing is the language Forth.

To repeat myself:

This was NOT intended as a thoroughly exhaustive discourse. If you look at the Wikipedia list of languages, you'll see that I left out more than I put in, but that was deliberate.