Math Amateur

I am reading Matej Bresar's book, "Introduction to Noncommutative Algebra" and am currently focussed on Chapter 1: Finite Dimensional Division Algebras ... ...

I need help with some remarks of Bresar on the centre of an algebra ...

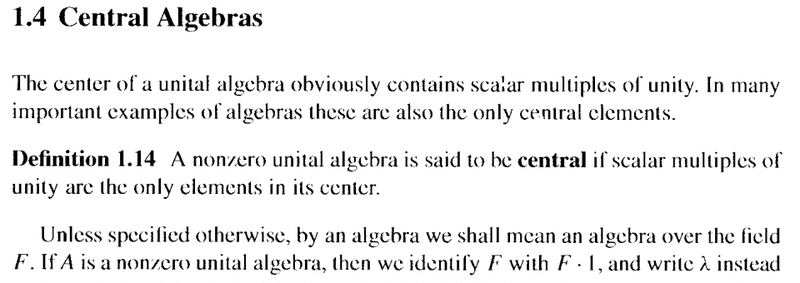

Commencing a section on Central Algebras, Bresar writes the following:

In the above text we read the following:

" ... The center of a unital algebra obviously contains scalar multiples of unity ... ... "

Now the center of a unital algebra ##A## is defined as the set

##Z(A) = \{ c \in A \mid cx = xc \text{ for all } x \in A \}##

Now ... clearly ##1 \in Z(A)## since ##1x = x1## for all ##x \in A## ...
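As a concrete illustration (an example of my own choosing, not taken from Bresar's text), take ##A## to be the algebra ##M_2(\mathbb{Q})## of ##2 \times 2## rational matrices. Its unity is the identity matrix, so "##3##" means ##3 \cdot 1##. The Python sketch below uses hypothetical helper names (`mat_mul`, `scalar_times_identity`, `is_central`) and tests membership in ##Z(A)## by checking commutation with the four matrix units ##E_{ij}##. Since the ##E_{ij}## span ##A## and multiplication is bilinear, this finite check is equivalent to the definition of ##Z(A)## for this particular algebra.

```python
from fractions import Fraction

# Assumption: A = M_2(Q); a 2x2 matrix is a tuple of rows ((a, b), (c, d)).

def mat_mul(x, y):
    """Return the matrix product xy of two 2x2 matrices."""
    return tuple(
        tuple(sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def scalar_times_identity(lam):
    """Return lam * 1, i.e. lam times the identity matrix (the unity of A)."""
    return ((lam, 0), (0, lam))

# The matrix units E_11, E_12, E_21, E_22 span M_2(Q) as a vector space.
# By bilinearity of multiplication, c commutes with every x in A
# if and only if c commutes with each E_ij.
MATRIX_UNITS = [
    ((1, 0), (0, 0)),
    ((0, 1), (0, 0)),
    ((0, 0), (1, 0)),
    ((0, 0), (0, 1)),
]

def is_central(c):
    """Decide whether c lies in Z(A) by checking cE == Ec for each matrix unit E."""
    return all(mat_mul(c, e) == mat_mul(e, c) for e in MATRIX_UNITS)

three = scalar_times_identity(Fraction(3))
print(is_central(three))             # True: 3*1 is central
print(is_central(((1, 1), (0, 1))))  # False: fails against E_21
```

Note that the check for `three` succeeds for the same reason it would for any scalar ##\lambda##; the non-scalar matrix fails, which is consistent with the center of ##M_2(\mathbb{Q})## consisting exactly of the scalar multiples of the identity.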

BUT ... why do elements like ##3## belong to ##Z(A)## ... ?

That is ... how would we demonstrate that ##3x = x3## for all ##x \in A## ... ?
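A sketch of the usual argument, assuming (as in Bresar's setting) that ##A## is a unital algebra over a field ##F## and that "##3##" stands for the scalar multiple ##3 \cdot 1## of unity. The key ingredient is the algebra axiom ##\lambda(xy) = (\lambda x)y = x(\lambda y)## for all ##\lambda \in F## and ##x, y \in A##, which ties scalar multiplication to the ring multiplication. For any ##\lambda \in F## and ##x \in A##:

```latex
\begin{align*}
(\lambda 1)x &= \lambda(1x)   && \text{by } (\lambda x)y = \lambda(xy) \\
             &= \lambda x     && \text{since } 1 \text{ is the unity of } A \\
             &= \lambda(x1)   && \text{since } 1 \text{ is the unity of } A \\
             &= x(\lambda 1)  && \text{by } \lambda(xy) = x(\lambda y)
\end{align*}
```

Taking ##\lambda = 3## gives ##(3 \cdot 1)x = x(3 \cdot 1)## for every ##x \in A##, which is ##3x = x3## in the notation above. Hence ##F1 = \{\lambda 1 \mid \lambda \in F\} \subseteq Z(A)##.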

Hope someone can help ...

Peter
