Math Amateur

I am reading Dummit and Foote: Abstract Algebra (Third Edition) ... and am focused on Section 11.5 Tensor Algebras, Symmetric and Exterior Algebras ...

In particular I am trying to understand Theorem 31 but at present I am very unsure about how to interpret the theorem and need some help in understanding the basic form of the elements involved and the mechanics of computations ... so would appreciate any help however simple ...

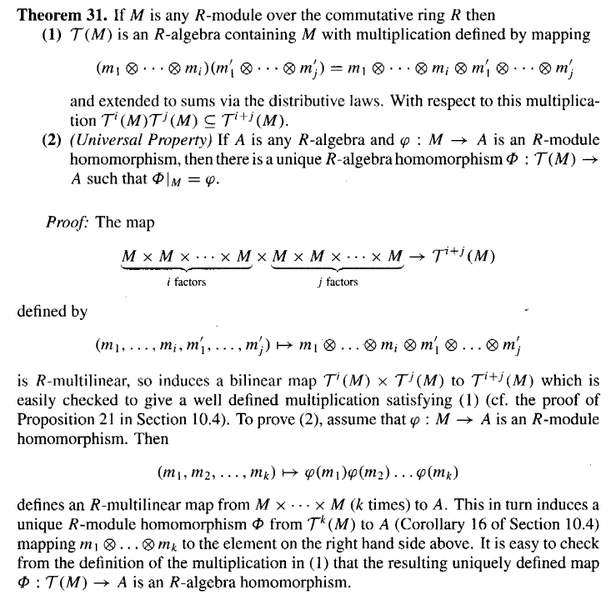

Theorem 31 and its proof read as follows:

My (rather simple) questions are as follows:

Question 1

In the above text from D&F we read the following: " ... ##\mathcal{T} (M)## is an ##R##-algebra containing ##M## with multiplication defined by the mapping:

##( m_1 \otimes \ ... \ \otimes m_i ) ( m'_1 \otimes \ ... \ \otimes m'_j ) = m_1 \otimes \ ... \ \otimes m_i \otimes m'_1 \otimes \ ... \ \otimes m'_j ##

... "

My questions are as follows:

What do the distributive laws look like in this case ... and would sums of elements be just formal sums ... or would we be able to add elements in the same sense as in the ring ##\mathbb{Z}## where the sum ##2+3## gives an entirely different element ##5## ... ?
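To make this question concrete, here is what I would guess the distributive laws look like, assuming the product is extended bilinearly from simple tensors (the elements ##a, b, c, m' \in M## are my own hypothetical names, not taken from D&F):

##(a + (b \otimes c)) \, m' = a \otimes m' + b \otimes c \otimes m'##

##(a + b) \, m' = (a + b) \otimes m' = a \otimes m' + b \otimes m'##

In the first line ##a## and ##b \otimes c## lie in different degrees, so their sum looks like a formal sum to me. In the second line ##a + b## is the actual sum in ##M##, in the same sense as ##2 + 3 = 5## in ##\mathbb{Z}##. I am not sure this reading is right.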

Further, how do we know that with respect to multiplication ##\mathcal{T}^{i} (M) \ \mathcal{T}^{j} (M) \subseteq \mathcal{T}^{i+j} (M)## ... ... ?

Question 2

In the proof we read the following: "The map

## \underbrace{ M \times M \times \ ... \ \times M }_{ i \ factors} \times \underbrace{ M \times M \times \ ... \ \times M }_{ j \ factors} \longrightarrow \mathcal{T}^{i+j} (M) ##

defined by

##(m_1, \ ... \ , m_i, m'_1, \ ... \ , m'_j) \mapsto m_1 \otimes \ ... \ ... \ \otimes m_i \otimes m'_1 \otimes \ ... \ ... \ \otimes m'_j ##

is ##R##-multilinear, so induces a bilinear map ## \mathcal{T}^{i} (M) \times \mathcal{T}^{j} (M)## to ##\mathcal{T}^{i+j} (M)## ... "

My questions are:

... what does the multilinearity of the above map look like ... ?
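My tentative reading, which may well be wrong, is that multilinearity means linearity in each slot separately with the other entries held fixed. For the ##k##-th of the first ##i## slots, with ##r, s \in R## and ##n_k \in M## (names I am introducing for illustration, not from D&F), I would expect:

##(m_1, \ ... \ , r m_k + s n_k, \ ... \ , m_i, m'_1, \ ... \ , m'_j) \mapsto r \, ( m_1 \otimes \ ... \ \otimes m_k \otimes \ ... \ \otimes m_i \otimes m'_1 \otimes \ ... \ \otimes m'_j ) + s \, ( m_1 \otimes \ ... \ \otimes n_k \otimes \ ... \ \otimes m_i \otimes m'_1 \otimes \ ... \ \otimes m'_j )##

and similarly for each of the last ##j## slots.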

and

... how do we demonstrate that the above map induces a bilinear map ## \mathcal{T}^{i} (M) \times \mathcal{T}^{j} (M)## to ##\mathcal{T}^{i+j} (M)## ... ? How/why is this the case ... ?

Hope someone can help ...

Peter
