Math Amateur


I am reading John M. Lee's book: Introduction to Smooth Manifolds ...

I am focused on Chapter 3: Tangent Vectors ...

I need some help fully understanding Lee's discussion of computations with tangent vectors and pushforwards ... in particular, I need help with a further aspect of Lee's exposition of pushforwards in coordinates for a smooth map [itex]F: M \longrightarrow N[/itex] between smooth manifolds [itex]M[/itex] and [itex]N[/itex] ...

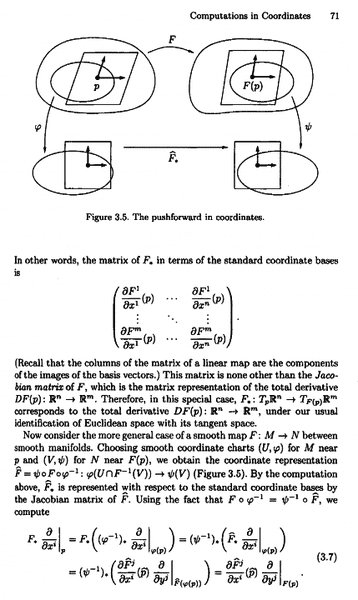

The relevant conversation in Lee is as follows:

In the above text, equation 3.7 reads as follows:

In the above text, equation 3.7 reads as follows:

" ... ...

[itex]F_* \frac{ \partial }{ \partial x^i } \Big|_p = F_* \Big( ( \phi^{-1} )_* \frac{ \partial }{ \partial x^i } \Big|_{\phi(p)} \Big)[/itex]

[itex]\qquad = ( \psi^{-1} )_* \Big( \tilde{F}_* \frac{ \partial }{ \partial x^i } \Big|_{\phi(p)} \Big)[/itex]

[itex]\qquad = ( \psi^{-1} )_* \Big( \frac{ \partial \tilde{F}^j }{ \partial x^i } ( \tilde{p} ) \frac{ \partial }{ \partial y^j } \Big|_{ \tilde{F} ( \phi (p))} \Big)[/itex]

[itex]\qquad = \frac{ \partial \tilde{F}^j }{ \partial x^i } ( \tilde{p} ) \frac{ \partial }{ \partial y^j } \Big|_{ F (p) } \qquad (3.7)[/itex]

... ... ... "
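The end result of 3.7 says that, in coordinate bases, the components of [itex]F_* \frac{\partial}{\partial x^i}|_p[/itex] are the Jacobian entries [itex]\frac{\partial \tilde{F}^j}{\partial x^i}(\tilde{p})[/itex]. As a rough numerical illustration of the Euclidean part of that claim only, here is a sketch with a made-up coordinate representation `F_tilde` and test function `g` (both hypothetical, not from Lee), where tangent vectors are modelled as derivations approximated by central differences:

```python
import math

# Hypothetical coordinate representation F~ : R^2 -> R^2, standing in for
# psi ∘ F ∘ phi^{-1}; any smooth map would do for this check.
def F_tilde(x):
    x1, x2 = x
    return (x1 * x2, math.sin(x1) + x2 ** 2)

# Hypothetical smooth test function g on the target coordinate space R^2.
def g(y):
    y1, y2 = y
    return math.exp(y1) * math.cos(y2)

H = 1e-5  # central-difference step size

def partial(f, point, i):
    """Approximate (d/dx^i)|_point acting on a scalar function f (0-based index i)."""
    plus, minus = list(point), list(point)
    plus[i] += H
    minus[i] -= H
    return (f(plus) - f(minus)) / (2 * H)

def pushforward_applied(p, i):
    """(F~_* d/dx^i|_p)(g), computed from the definition as d/dx^i|_p (g ∘ F~)."""
    return partial(lambda x: g(F_tilde(x)), p, i)

def jacobian_formula(p, i):
    """sum_j (dF~^j/dx^i)(p) * (d/dy^j|_{F~(p)}) g, the chain-rule right-hand side."""
    q = F_tilde(p)
    total = 0.0
    for j in range(2):
        # The lambda is evaluated immediately inside partial, so j is the current index.
        dFj_dxi = partial(lambda x: F_tilde(x)[j], p, i)
        total += dFj_dxi * partial(g, q, j)
    return total

p_tilde = (0.7, -1.3)  # hypothetical point standing in for phi(p)
for i in range(2):
    lhs = pushforward_applied(p_tilde, i)
    rhs = jacobian_formula(p_tilde, i)
    print(f"i={i}: definition {lhs:.8f}, Jacobian formula {rhs:.8f}")
```

The two printed columns should agree up to finite-difference error; the step through [itex](\psi^{-1})_*[/itex] is not modelled here.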

I cannot see how Equation 3.7 is derived ... can someone please help ...

Specifically, my questions are as follows:

Question 1

What is the explicit logic and justification for the step

[itex]F_* \Big( ( \phi^{-1} )_* \frac{ \partial }{ \partial x^i } \Big|_{\phi(p)} \Big) = ( \psi^{-1} )_* \Big( \tilde{F}_* \frac{ \partial }{ \partial x^i } \Big|_{\phi(p)} \Big)[/itex]
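A sketch of one way this step can be justified, assuming (as in Lee's setup) that the coordinate representation is [itex]\tilde{F} = \psi \circ F \circ \phi^{-1}[/itex] on the domain where it is defined, and using the chain rule for pushforwards, [itex](G \circ H)_* = G_* \circ H_*[/itex]:

```latex
\begin{aligned}
\tilde{F} = \psi \circ F \circ \phi^{-1}
  &\;\Longrightarrow\; F \circ \phi^{-1} = \psi^{-1} \circ \tilde{F}, \\
F_* \Big( (\phi^{-1})_* \frac{\partial}{\partial x^i}\Big|_{\phi(p)} \Big)
  &= (F \circ \phi^{-1})_* \frac{\partial}{\partial x^i}\Big|_{\phi(p)} \\
  &= (\psi^{-1} \circ \tilde{F})_* \frac{\partial}{\partial x^i}\Big|_{\phi(p)} \\
  &= (\psi^{-1})_* \Big( \tilde{F}_* \frac{\partial}{\partial x^i}\Big|_{\phi(p)} \Big).
\end{aligned}
```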

Question 2

What is the explicit logic and justification for the step

[itex]( \psi^{-1} )_* \Big( \tilde{F}_* \frac{ \partial }{ \partial x^i } \Big|_{\phi(p)} \Big) = ( \psi^{-1} )_* \Big( \frac{ \partial \tilde{F}^j }{ \partial x^i } ( \tilde{p} ) \frac{ \partial }{ \partial y^j } \Big|_{ \tilde{F} ( \phi (p))} \Big)[/itex]
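A sketch of how this step reduces to the ordinary chain rule in [itex]\mathbb{R}^n[/itex], assuming [itex]\tilde{p} = \phi(p)[/itex] and the definition [itex](G_* X) f = X(f \circ G)[/itex], applied to an arbitrary smooth function [itex]f[/itex] defined near [itex]\tilde{F}(\tilde{p})[/itex] (summation over [itex]j[/itex] implied):

```latex
\begin{aligned}
\Big( \tilde{F}_* \frac{\partial}{\partial x^i}\Big|_{\tilde{p}} \Big) f
  &= \frac{\partial}{\partial x^i}\Big|_{\tilde{p}} \big( f \circ \tilde{F} \big)
   = \frac{\partial (f \circ \tilde{F})}{\partial x^i}(\tilde{p}) \\
  &= \frac{\partial f}{\partial y^j}\big(\tilde{F}(\tilde{p})\big)\,
     \frac{\partial \tilde{F}^j}{\partial x^i}(\tilde{p})
   = \Big( \frac{\partial \tilde{F}^j}{\partial x^i}(\tilde{p})\,
     \frac{\partial}{\partial y^j}\Big|_{\tilde{F}(\tilde{p})} \Big) f .
\end{aligned}
```

Since this holds for every such [itex]f[/itex], the two tangent vectors agree, and applying [itex](\psi^{-1})_*[/itex] to both sides gives the step.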

Question 3

What is the explicit logic and justification for the step

[itex]( \psi^{-1} )_* \Big( \frac{ \partial \tilde{F}^j }{ \partial x^i } ( \tilde{p} ) \frac{ \partial }{ \partial y^j } \Big|_{ \tilde{F} ( \phi (p))} \Big) = \frac{ \partial \tilde{F}^j }{ \partial x^i } ( \tilde{p} ) \frac{ \partial }{ \partial y^j } \Big|_{ F (p) }[/itex]
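A sketch of this last step, assuming the coordinate vectors on [itex]N[/itex] are defined in the same way as the [itex]x^i[/itex] ones, i.e. [itex]\frac{\partial}{\partial y^j}|_q = (\psi^{-1})_* \frac{\partial}{\partial y^j}|_{\psi(q)}[/itex]. Two facts do the work: [itex](\psi^{-1})_*[/itex] is linear, so the real numbers [itex]\frac{\partial \tilde{F}^j}{\partial x^i}(\tilde{p})[/itex] pass through it, and [itex]\tilde{F}(\phi(p)) = \psi(F(p))[/itex], so one can take [itex]q = F(p)[/itex]:

```latex
\begin{aligned}
(\psi^{-1})_* \Big( \frac{\partial \tilde{F}^j}{\partial x^i}(\tilde{p})\,
   \frac{\partial}{\partial y^j}\Big|_{\tilde{F}(\phi(p))} \Big)
  &= \frac{\partial \tilde{F}^j}{\partial x^i}(\tilde{p})\,
     (\psi^{-1})_* \frac{\partial}{\partial y^j}\Big|_{\psi(F(p))} \\
  &= \frac{\partial \tilde{F}^j}{\partial x^i}(\tilde{p})\,
     \frac{\partial}{\partial y^j}\Big|_{F(p)} .
\end{aligned}
```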

As you can see ... I am more than slightly confused by equation 3.7 ... hope someone can help ...

Peter

===========================================================

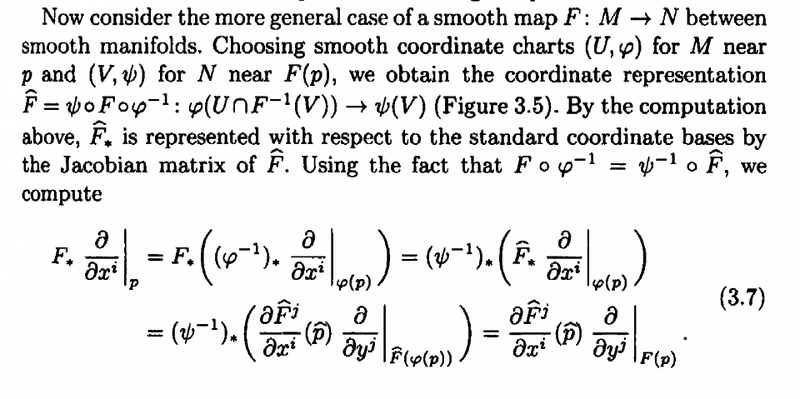

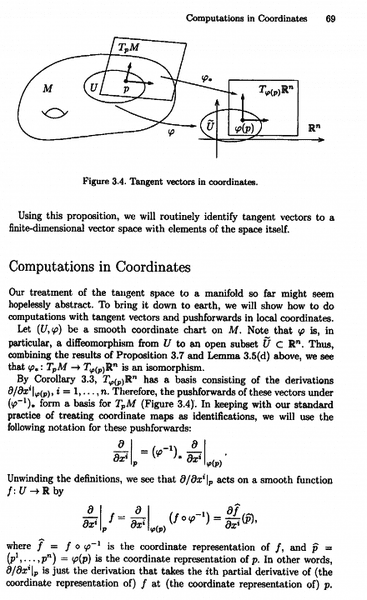

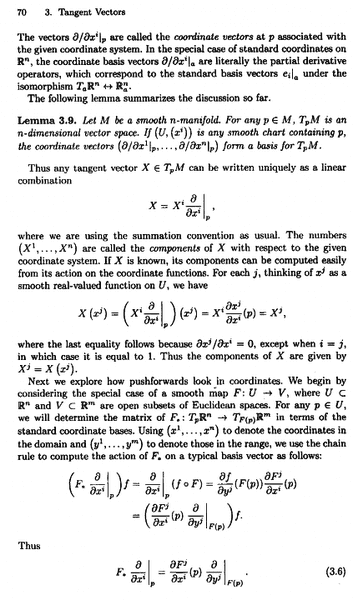

To give readers the notation and context for the above I am providing the text of Lee's section on Computations in Coordinates (pages 69 -72) ... ... as follows:
