- #1

LCSphysicist


- Homework Statement

- I am not sure if I am interpreting it right, but I think so.

- Relevant Equations

- All below

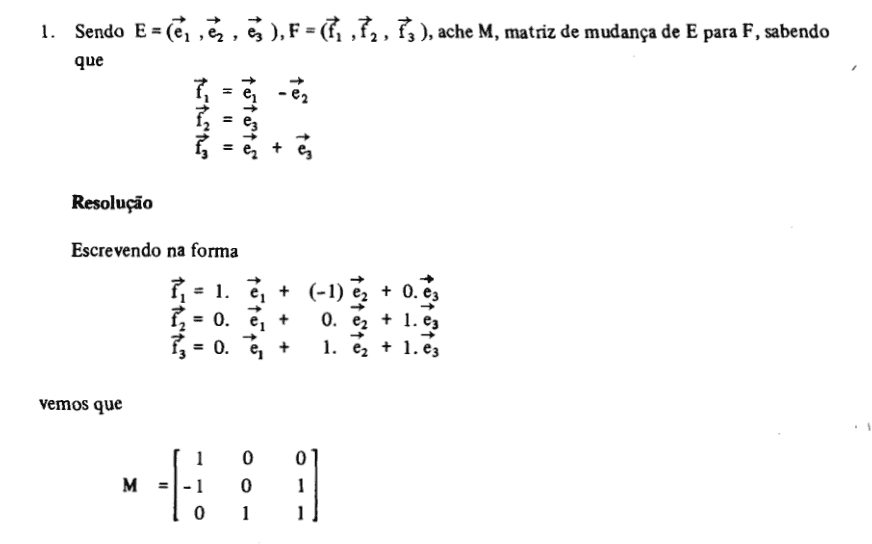

See this exercise: it asks for the change-of-basis matrix E -> F.

If you pay attention, it writes F in terms of E and uses those coefficients as the matrix.

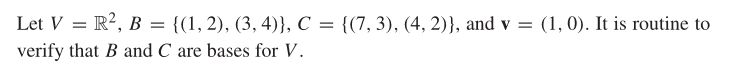

Now see this other exercise:

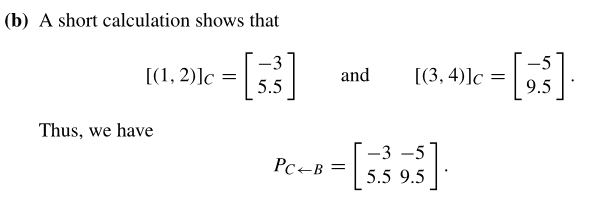

It asks for the matrix B -> C, but this time it writes B in terms of C.

Which is correct? If they are essentially the same, where am I interpreting it wrong?
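To make the two readings concrete, here is a minimal sketch with made-up 2D bases. These are an assumption for illustration, not the bases from the exercises above. It takes E as the standard basis and F with f1 = e1 + e2, f2 = e2. The names `P`, `Q`, `matvec`, and `matmul` are hypothetical helpers. Writing F in terms of E gives one matrix, writing E in terms of F gives another, and the two are inverses of each other.

```python
def matvec(a, v):
    """Multiply a matrix (list of rows) by a column vector (list)."""
    return [sum(a[i][k] * v[k] for k in range(len(v))) for i in range(len(a))]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


# Columns are f1, f2 written in terms of E: f1 = e1 + e2, f2 = e2.
P = [[1, 0],
     [1, 1]]

# Columns are e1, e2 written in terms of F: e1 = f1 - f2, e2 = f2.
Q = [[1, 0],
     [-1, 1]]

# Take v = 2*f1 + 3*f2, so its coordinates in F are [2, 3].
v_F = [2, 3]

# P turns F-coordinates into E-coordinates.
v_E = matvec(P, v_F)

# Q turns E-coordinates back into F-coordinates.
v_back = matvec(Q, v_E)

print(v_E)           # [2, 5]
print(v_back)        # [2, 3]
print(matmul(P, Q))  # [[1, 0], [0, 1]]
```

In this toy case, the matrix built by writing F in terms of E sends the basis vectors of E to those of F, but it converts coordinates in the opposite direction, from F to E. That may be why two books can both say "the matrix from one basis to another" and still write down matrices that are inverses of each other.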


XD
