- #1

Juanda

- TL;DR Summary

- I want to find the probability of adding random numbers with the result being within a certain bracket.

I am not a fan of probability and statistics. I know the field is extremely useful, and it is probably the branch of mathematics most applicable to understanding the world around us, but I am a Calculus and Vectors boy. This problem, though, I find interesting. I would like to find a generalized solution for the following situation. It is related to the study of tolerances in manufacturing. I am certain it has more applications, but that's how it occurred to me. To be honest, I don't think I will be able to apply this in real life because I don't have access to the probability distributions (although I could make some educated guesses), but the problem is still interesting.

Let's say we are adding two numbers. The result is simple.

$$2+3=5$$

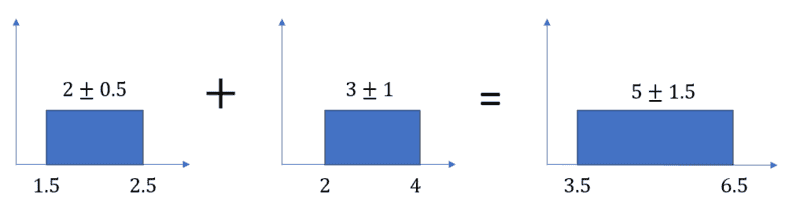

However, let's now think of a scenario where 2 and 3 are not fixed. Instead, each follows a probability distribution. To start with something simple, let's assume they are constant (uniform) distributions as shown.

In this case I believe I know how to get the bracket of the result: the limits simply add.

$$(2\pm 0.5)+(3\pm 1)=5\pm 1.5$$
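As a quick sanity check of this flat case, here is a minimal Monte Carlo sketch in Python (standard library only). It assumes the two numbers are independent and uniform over their brackets; the function name `simulate_uniform_sum` and the sample size are just illustrative choices. It shows that ##5\pm 1.5## is the worst-case bracket, while the sum itself is no longer flat inside it.

```python
import random

def simulate_uniform_sum(n=100_000, seed=0):
    """Draw n samples of a + b with a ~ U(1.5, 2.5) and b ~ U(2, 4), independent."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [rng.uniform(1.5, 2.5) + rng.uniform(2.0, 4.0) for _ in range(n)]

sums = simulate_uniform_sum()

# Every sample stays inside the worst-case bracket 5 +/- 1.5.
print(min(sums), max(sums))

# Fraction of samples inside the narrower bracket 5 +/- 1.
fraction_inner = sum(4.0 <= s <= 6.0 for s in sums) / len(sums)
print(fraction_inner)
```

For two flat distributions the sum has a trapezoidal density, and the exact probability of landing in ##5\pm 1## is 0.875, so the outer edges of the ##\pm 1.5## bracket are reached only rarely.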

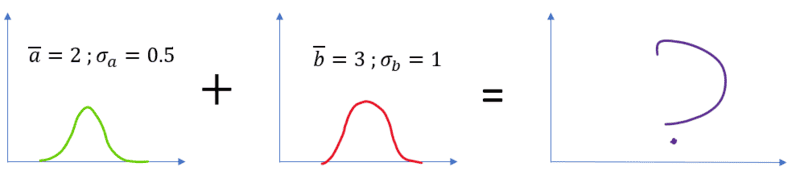

But if the probability distributions get more complex, I do not know how to solve it. For example:

I used a normal distribution, but I am certain there must be a general way to calculate it for any given probability distribution. I would like to be able to answer the following:

Given the number ##a## with some probability distribution (whichever) and the number ##b## with some probability distribution (whichever), what is the probability that the sum ##c = a + b## falls between ##e## and ##d##?
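One standard route to this first question, assuming ##a## and ##b## are independent and have densities ##f_a## and ##f_b##, is the convolution of the two densities:

$$f_c(c)=\int_{-\infty}^{\infty} f_a(x)\,f_b(c-x)\,dx,\qquad P(e\le c\le d)=\int_e^d f_c(c)\,dc$$

The sketch below evaluates that probability numerically on a grid, so it accepts any pair of density functions. The function name `prob_sum_between`, the grid size, and the example normal distributions (standard deviations 0.25 and 0.5) are assumptions made for illustration, not values from the problem.

```python
from math import hypot
from statistics import NormalDist

def prob_sum_between(pdf_a, range_a, pdf_b, range_b, e, d, n=400):
    """Approximate P(e <= a + b <= d) for independent a and b.

    pdf_a, pdf_b: density functions of a and b.
    range_a, range_b: (low, high) intervals holding essentially all the mass.
    Uses a midpoint grid: each cell's probability is counted if the sum of
    its midpoints falls in [e, d].
    """
    (a_lo, a_hi), (b_lo, b_hi) = range_a, range_b
    ha = (a_hi - a_lo) / n
    hb = (b_hi - b_lo) / n
    xs = [a_lo + (i + 0.5) * ha for i in range(n)]
    ys = [b_lo + (j + 0.5) * hb for j in range(n)]
    wa = [pdf_a(x) * ha for x in xs]  # probability mass of each a-cell
    wb = [pdf_b(y) * hb for y in ys]  # probability mass of each b-cell
    total = 0.0
    for x, pa in zip(xs, wa):
        for y, pb in zip(ys, wb):
            if e <= x + y <= d:
                total += pa * pb
    return total

# Illustrative inputs (assumed): a ~ N(2, 0.25), b ~ N(3, 0.5), bracket 4..6.
dist_a = NormalDist(2.0, 0.25)
dist_b = NormalDist(3.0, 0.5)
p_numeric = prob_sum_between(dist_a.pdf, (0.5, 3.5), dist_b.pdf, (0.0, 6.0), 4.0, 6.0)

# Cross-check: the sum of independent normals is normal, with variances added.
dist_c = NormalDist(5.0, hypot(0.25, 0.5))
p_exact = dist_c.cdf(6.0) - dist_c.cdf(4.0)
print(p_numeric, p_exact)
```

The normal case is only used here because it has a closed form to compare against; any other density can be passed in the same way, as long as the ranges cover it.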

Similarly, the following question would be interesting to answer:

Given the target that ##c## falls within the limits ##e## and ##d## with 95% certainty, what should ##a## and ##b## be, given that they follow some probability distribution (whichever)?

This second question is close to what a designer faces when choosing the tolerances of machined parts. The first one lets us check whether what was chosen is right. The second one tries to find the best answer directly.

I am aware that the second question has infinitely many solutions because the problem is not sufficiently defined. I don't know how to constrain it a little more so that it is still realistic and yields the best possible solution directly. I would guess an additional constraint would be to force the tolerance brackets to be as big as possible in both numbers so the parts are easier to manufacture. "Big" is relative, so I would define big as a percentage of the average.
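To make that extra constraint concrete, here is a minimal sketch under strong assumptions that are not part of the problem statement: ##a## and ##b## are independent and normally distributed around their nominal values, each tolerance half-width equals `k` standard deviations of its part (`k = 3` is a common but arbitrary choice), and both tolerances are the same fraction of their nominal. The function name `equal_fraction_tolerances` is hypothetical.

```python
from math import hypot
from statistics import NormalDist

def equal_fraction_tolerances(a_nom, b_nom, half_width, coverage=0.95, k=3.0):
    """Largest tolerances (same fraction of nominal for a and b) such that
    a + b stays within +/- half_width of a_nom + b_nom with probability
    `coverage`. Assumes independent normal parts with sigma = tolerance / k.
    """
    z = NormalDist().inv_cdf(0.5 + coverage / 2)  # two-sided quantile, ~1.96 for 95%
    sigma_c_max = half_width / z                  # largest allowed sigma of the sum
    # sigma_c = (frac / k) * sqrt(a_nom**2 + b_nom**2), solved for frac:
    frac = k * sigma_c_max / hypot(a_nom, b_nom)
    return frac * a_nom, frac * b_nom

# Example with the numbers used above: nominals 2 and 3, target 5 +/- 1.5.
tol_a, tol_b = equal_fraction_tolerances(2.0, 3.0, 1.5)
print(tol_a, tol_b)

# Check: with these tolerances the sum meets the 95% target.
sigma_c = hypot(tol_a / 3.0, tol_b / 3.0)
coverage_check = NormalDist(5.0, sigma_c).cdf(6.5) - NormalDist(5.0, sigma_c).cdf(3.5)
print(coverage_check)
```

Under these assumptions the brackets come out at about ±1.27 and ±1.91, wider than the worst-case ±0.5 and ±1, because extreme values of both parts rarely coincide. The result depends entirely on the assumed distribution and on `k`.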

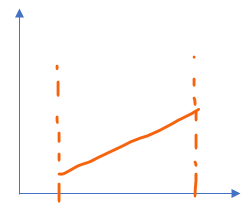

As a last bonus question, I mentioned at the top of the post that the applicability of this is limited by how well we actually know the probability distributions of machined parts. Let's imagine I ask for 100 cylinders to be machined to 50±0.5 mm in diameter. I believe the machinist will keep removing material with his lathe until he is within the tolerance bracket. He has no particular interest in delivering a part as close to the nominal value as possible, since that risks scrapping the part by removing more material than necessary. Therefore, my current guess is that the distributions must look somewhat like this:

That is only an educated guess in which even psychology is involved. The machinist might be somewhat of a perfectionist who wants to get close to the nominal value. The only way to know would be to measure all the manufactured parts and build the probability distribution from them, but that implies the job is already done, so you can no longer modify the tolerance bracket in the design to hit the target you initially had in mind. Is there a best way to approach this? What probability distribution would you apply?
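The "stop as soon as it is in tolerance" guess can be played out in a toy simulation. Everything numeric here is made up for illustration: the starting stock of 52 mm, the random cut depth of 0.05 to 0.30 mm per pass, and the function name `machine_part`. It is a sketch of the guess, not a model of a real lathe or a real machinist.

```python
import random
from statistics import mean

def machine_part(rng, upper=50.5, stock=52.0, min_cut=0.05, max_cut=0.30):
    """Remove random amounts of material until the diameter is at or below `upper`."""
    diameter = stock
    while diameter > upper:
        diameter -= rng.uniform(min_cut, max_cut)  # one pass on the lathe
    return diameter

rng = random.Random(1)  # fixed seed so the run is repeatable
parts = [machine_part(rng) for _ in range(100)]

# Smallest, average and largest finished diameter of the 100 cylinders.
print(min(parts), mean(parts), max(parts))
```

With these made-up numbers every part ends up between 50.2 and 50.5 mm, so the whole batch sits in the upper part of the bracket rather than around the nominal 50 mm.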

So far, all my work is in low volumes, so even if I knew the details of this problem there is little chance any measured distribution would be representative enough to apply it. I still think it's an interesting matter to think about, and it might be more useful in the future. Knowledge is power.

Let me know your thoughts.

Thanks in advance.
